Conflicts in Categorizing

Source: Me with Microsoft Paint.

Disclaimers

- I use the word category quite loosely in this piece. I do not refer to the mathematical field of Category Theory, although there may be some interesting, unexplored connections there.

- I use category, class (the machine-learning term, not the economic term), label, and group interchangeably. I have tried to make the meanings of these terms clear from context.

- I do not claim to speak for any group of which I am not a part; I also recognize the possibility and importance of trying to understand experiences that are not my own.

I recently gave a talk about some problems with fair machine learning. Throughout the talk, I had one nagging thought. In the field of fair machine learning, we’re often interested in “protected attributes”, like race, against which we do not want to discriminate. While there are certainly numerous issues with the conception of fairness as just non-discrimination (see my slides; even the name “protected attributes” neglects the importance of affirmative action), the problem that I wish I had had more time to discuss is the reification of social categories through continued usage of them.

By reification, I mean to say that continued use of a label reinforces the power it has in describing reality. For instance, imagine that we start to call people whizzletons (I just made this up) based on some biological or social characteristic. Although the use of whizzleton certainly is strange at first glance, we could imagine the word gaining broader acceptance as more and more people identified with it, however unlikely that acceptance might be. Even if one does not identify as a whizzleton, the word might become a familiar part of the lexicon as discussion of the group increases in tandem with media representation. Broader social events might also precipitate the popularity of the label, just as Christianity shed some of its stigma after its adoption by the Roman Empire. After some years, whizzleton might be as natural a label as communist.

This example was imaginary, but the principle is important. Reification of social categories can have a positive spin, providing legitimacy to an identity that heretofore had been marginalized. For categories that are unjustly a source of opprobrium, members of the group can reclaim linguistic and social markers that had previously been defined for them. LGBTQ2S+ pride movements, for instance, bring needed light to identities that for too long have been shrouded in the dark. I remember a time when many still considered gay to be a slur; it may still remain so for some people, and I do not wish to minimize their experiences or invalidate their understandings of the word. At the same time, a massive shift has occurred in the past couple of decades: especially among younger people, gay connotes a positive connection to a meaningful community, one which for the most part had been left in the shadows by policies like don’t ask, don’t tell. The reification of this social category promotes the idea that it is not only acceptable to be who one is, but that one should be proud of it; one should loudly resist all silencing and exercise the liberty to define one’s own good life.

Furthermore, ignoring the existence of these social categories can do more harm than good. For example, race-blind policies can nevertheless fail to address, or even magnify, the inequality and disproportionate violence that racialized peoples already face. Although the police are ostensibly race-blind, Black and Indigenous peoples still bear the brunt of police violence. After WWII, the GI Bill, although meant to be race-neutral, neglected Black soldiers in practice. Although one might minimize this harm by claiming that nobody was hurt, I would maintain that this inequality itself, especially in light of the history of racial dynamics, is an insult to dignity. This is not even touching upon the vast racial wealth gap, where it is plausible that Black families benefit relatively less from neutral economic policies if gains accrue to the top, as they have recently done. All of this is to say that without policies that target race (i.e., without affirmative action), inequality can remain stagnant or widen.

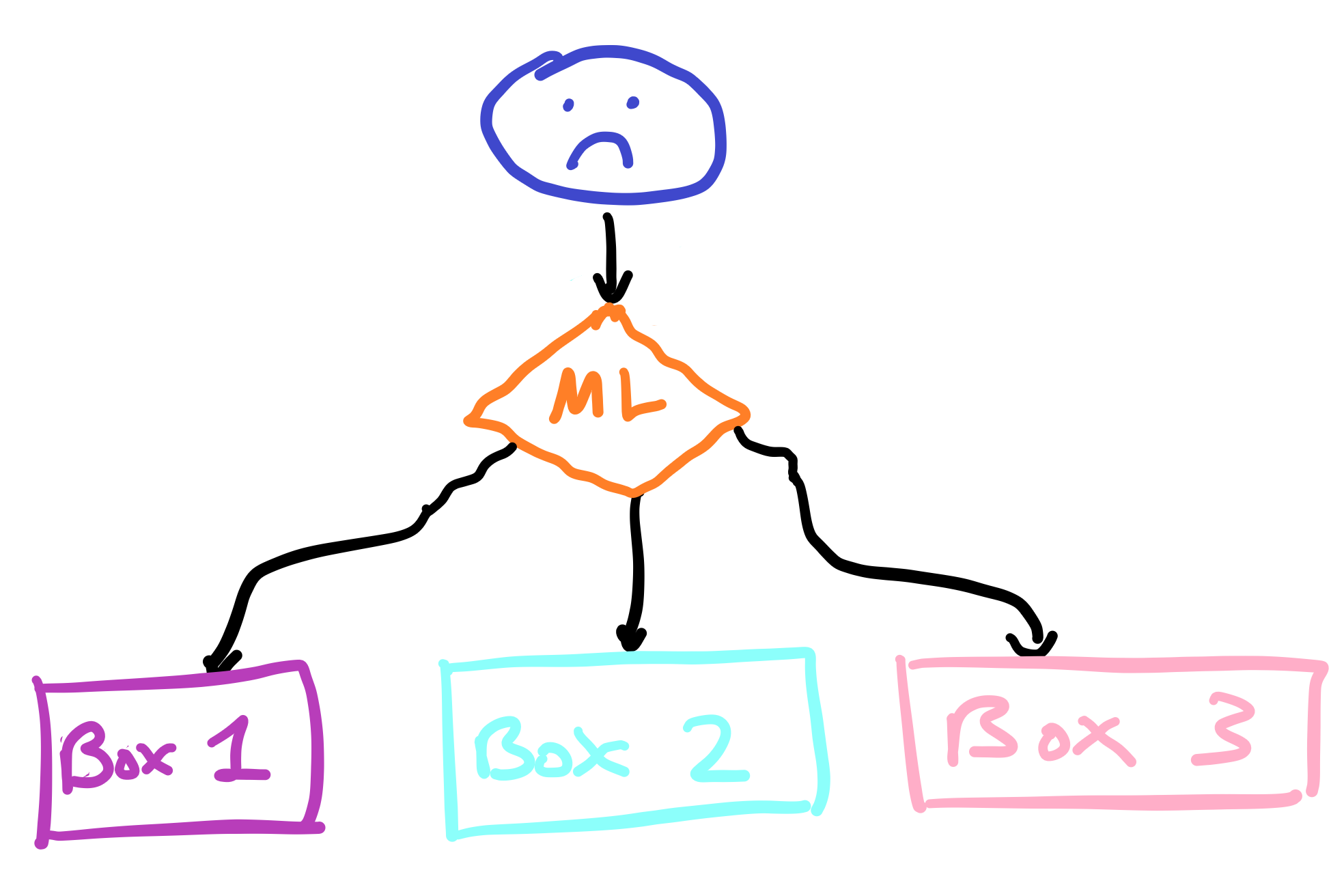

At the same time, continued use of a category can make it impossible to challenge or negotiate its definition. This problem is especially relevant in machine learning, where many researchers focus on the classification of objects into distinct classes. These classes are usually pre-defined by the designer of the system or the task, ranging from mundane (types of fruits) to politically contentious (racial categories), all to varying degrees implicating complex presumptions about the nature of perception, history, and power.

What are the harms of such reification? One is that it reduces our power to define our own identities. Especially if we exist at the intersections of socially recognized identities (e.g., biraciality), categorization into one class or another could itself be an infringement upon the liberty of self-construction. A second harm concerns the historical construction of categories. As this work and the references therein discuss, the construction of racial categories proceeded through the partitioning of life outcomes: Black as a racial label derives much significance from the theft of lives across the Atlantic to fields where they and their descendants were subjected to servitude. The source of its significance has shifted, as typified by the Harlem Renaissance, but such shifts may not always occur for everybody who assumes the label.

I can speak more fully about my own racial categories. The construction of the Asian racial category is connected to a history of exclusion, from fears of yellow peril to discriminatory immigration and citizenship policies. Based on phenotype, I am classified as other, not always fully a member of this country. I have not fully reclaimed the label of Asian, much less anything racial to do with the colour yellow. I do value my identity, but do not derive that value from the fact of my racial category; rather, the value comes from a connection to cultural worlds. When I was last in China, the most tangible feeling throughout my trip was the intangibility of my race. As I floated like a cloud through the streets, I was aware that nobody was particularly aware of me, that there was no racial barrier to overcome in any interaction with a stranger. This is not to say that China is homogeneous (it is itself home to a diversity of peoples and cultures), but that I appeared to fit in with what I perceived to be the majority. Given my description thus far, an ethnic category might seem like a better fit than a racial one, but that’s not quite right either. I don’t identify particularly strongly with anything as clear-cut as Chinese, let alone Vietnamese. Asian-Canadian is a bit too vague to be useful, given the large volume of immigration to Canada and the varying degrees to which people self-identify with labels.

To summarize my discussion so far, although it can be useful to use social categories, avoiding them may sometimes be more desirable. It’s not that we shouldn’t have any categories at all: to operate in daily life, they are necessary. A knife is in the category of tools I can use to slice vegetables, while a chair is in the category of things that I can sit in. I can’t (shouldn’t) sit on a knife, any more than I can use a chair to slice vegetables. That’s not to say, however, that these categories are static. Knives can also be used as weapons, depending on the circumstance, while a chair can also be a (dangerous) stepping stool. The category of an object can change with time and place too; what was once a cooking pot could now be an artifact through which we peer into the lives of those who came before. This fluidity should extend to identities: I should have the power to negotiate the meaning others attach to my label.

Currently dominant paradigms in machine learning do not explicitly allow such flexibility. To the extent that machine learning is an extension of society, humans can go into the system and change categories according to the evolution of our understandings. Yet, in the systems themselves, and especially in supervised learning, one typically assumes that the labels represent some static truth about the data points. In addition to satisfying possible fairness constraints, one is expected to maintain a high degree of accuracy with respect to this ground truth. Adherence to this paradigm of accuracy sustains the underlying assumptions of the task, regardless of their validity. For instance, using just race as a category does not account for the effects of differing skin tones. Even among people assigned to the same racial category, colourism persists and classification error rates differ.
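To make that last point concrete, here is a minimal Python sketch of how error rates that look identical across coarse categories can hide a gap between subgroups within one category. Everything in it is an assumption for illustration: the records are made up rather than drawn from any real system, and the field names (`category`, `subgroup`, `actual`, `predicted`) and the helpers `make` and `error_rates` are hypothetical.

```python
from collections import defaultdict


def error_rates(records, key):
    """Return the misclassification rate for each distinct value of `key`."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for record in records:
        group = record[key]
        totals[group] += 1
        if record["predicted"] != record["actual"]:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def make(category, subgroup, pairs):
    """Build made-up records from (actual, predicted) label pairs."""
    return [
        {"category": category, "subgroup": subgroup, "actual": a, "predicted": p}
        for a, p in pairs
    ]


# Hypothetical data: two coarse categories, each with two subgroups.
records = (
    make("A", "A1", [(1, 1), (0, 0), (1, 1), (0, 0)])    # no errors
    + make("A", "A2", [(1, 0), (0, 0), (1, 1), (0, 1)])  # two errors
    + make("B", "B1", [(1, 1), (0, 1), (1, 1), (0, 0)])  # one error
    + make("B", "B2", [(1, 0), (0, 0), (1, 1), (0, 0)])  # one error
)

by_category = error_rates(records, "category")
by_subgroup = error_rates(records, "subgroup")

print(by_category)  # {'A': 0.25, 'B': 0.25}
print(by_subgroup)  # {'A1': 0.0, 'A2': 0.5, 'B1': 0.25, 'B2': 0.25}
```

Audited at the level of the coarse category, this toy classifier looks perfectly balanced. The disparity only becomes visible once the category itself is opened up, which is exactly what a fixed label set discourages.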

What would it mean to imbue machine learning algorithms with the capability to adapt categories to the data? This work suggests using unsupervised learning, instead of supervised learning, to infer categories that correspond to patterns of racial discrimination. In other words, instead of assuming the existence of labeled data, unsupervised learning would attempt to find patterns hidden in the data and assign labels according to those patterns. Such categories may often be consistent with race, but otherwise may adapt to the intricacies of the problem at hand. I don’t think, however, that using unsupervised learning is a simple solution. Given that humans also design unsupervised learning algorithms, a given algorithm is predisposed to discovering certain patterns from data and not others. Moreover, while unsupervised category discovery may be useful in some circumstances, such algorithms may become race-blind policies in another guise. Failure to detect patterns of abuse could be seen as evidence of its absence, disempowering the movements for justice that so many need.
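As a small illustration of how design choices predispose an unsupervised algorithm toward some patterns and not others, here is a sketch using plain one-dimensional k-means. To be clear about the assumptions: k-means is my stand-in and not the method of the work mentioned above, the data are made up, and the function names `nearest` and `kmeans_1d` and the deterministic starting rule are hypothetical choices for the example.

```python
def nearest(value, centroids):
    """Index of the centroid closest to `value`."""
    return min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))


def kmeans_1d(values, k, iterations=20):
    """Plain 1-D k-means (Lloyd's algorithm) with a deterministic start."""
    ordered = sorted(values)
    n = len(ordered)
    # Deterministic initialisation: k evenly spaced points from the sorted data.
    centroids = [ordered[(2 * i + 1) * n // (2 * k)] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for value in values:
            clusters[nearest(value, centroids)].append(value)
        # Move each centroid to the mean of its cluster; keep it if the cluster is empty.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return [nearest(value, centroids) for value in values]


# Made-up measurements with three visible clumps: near 1, near 4, and near 9.
values = [1.0, 1.2, 0.8, 4.0, 4.2, 3.8, 9.0, 9.2, 8.8]

two = kmeans_1d(values, k=2)
three = kmeans_1d(values, k=3)

print(two)    # [0, 0, 0, 0, 0, 0, 1, 1, 1]
print(three)  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```

With `k=2`, the middle clump is absorbed into its nearest neighbour and never appears as a category of its own; with `k=3`, it does. The data did not change, only the designer's choice of `k`. A group that the algorithm fails to separate has not thereby been shown not to exist.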

In the absence of AI systems that are critical (whatever that means in the AI context) of pre-defined categories, I think it advisable to develop strong norms of transparency and accountability. The categories used in applications, machine learning or otherwise, should be open to public contestation, with officials who are responsive to demands for change and sympathetic to the voices of the relatively powerless.

Acknowledgements

Thanks to Iara Santelices, Rudra Patel, and Kevin Wang for reading over a draft of this post.

Some Works

Racial Categories in Machine Learning

Design Justice, A.I., and Escape from the Matrix of Domination

Interventions over Predictions: Reframing the Ethical Debate for Actuarial Risk Assessment

Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification